Is g Real or Just Statistics? A Monologue with a Testable Prediction

At the Start: What Is g?

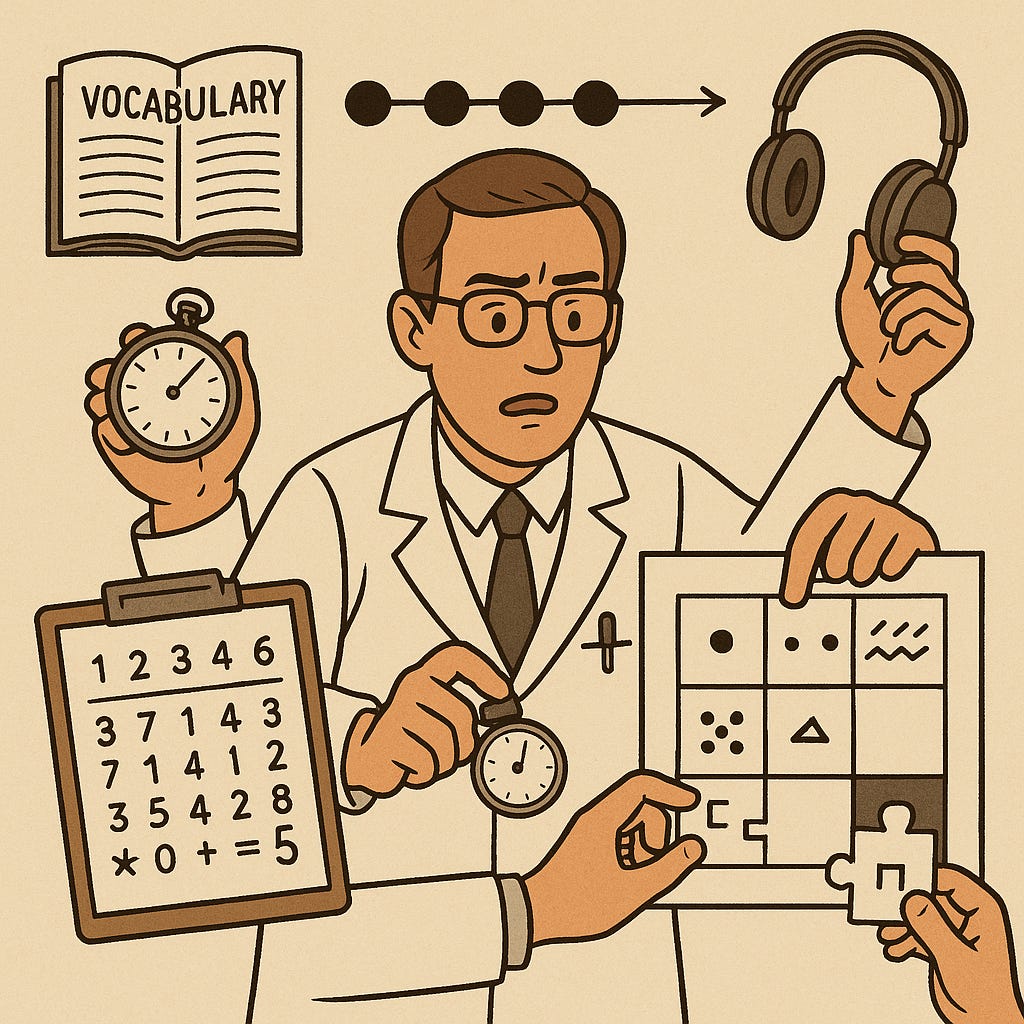

In 1904, Charles Spearman described what is now called the “positive manifold”: individuals who perform well on one cognitive test tend to perform well on others. Applying factor analysis (or, as a rough approximation, extracting the first principal component) to a battery of diverse tests, such as those assessing reasoning, memory span, processing speed, and vocabulary, yields a single latent factor that accounts for their shared variance. This factor is denoted g. Its magnitude and interpretation vary with the composition of the test battery and the sample, but across datasets g robustly predicts academic and occupational outcomes by capturing the common variance among the tests.

What concerns me here, however, is substantive intelligence: the capacity to construct and manipulate explanatory structures, involving abstraction, relational integration, and cross-contextual model transfer. This is distinct from g as a statistical construct. The claim I advance is counterintuitive: g, or near-proxies like general knowledge, can in certain contexts proxy this underlying capacity more effectively than a single direct reasoning test—yet g remains separate from the capacity itself.

To understand this, consider what a test battery measures. A standard battery includes novel reasoning items (e.g., matrix problems), speeded tasks (e.g., digit-symbol substitution, reaction or inspection time), short-term memory measures (e.g., digit or visuospatial spans), and crystallized components like vocabulary or general knowledge. Factor analysis derives g as the primary dimension explaining their covariation. Thus, g is a property of the battery’s structure, influenced by task reliability, sample heterogeneity, and shared causal influences—not an inherent attribute of individuals or items.
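The extraction step described above can be sketched in a few lines. This is a minimal illustration on simulated data, not an analysis of any real battery: the four test names, the weights on the shared influence, and the sample size are all assumptions made up for the example, and the first principal component stands in for a proper factor model.

```python
import numpy as np

def first_component(scores):
    """Loadings on the first principal component and its share of total variance."""
    corr = np.corrcoef(scores, rowvar=False)      # test-by-test correlation matrix
    eigvals, eigvecs = np.linalg.eigh(corr)       # eigenvalues in ascending order
    top = eigvecs[:, -1]                          # eigenvector of the largest eigenvalue
    if top.sum() < 0:                             # eigenvector sign is arbitrary; make it positive
        top = -top
    loadings = top * np.sqrt(eigvals[-1])
    share = eigvals[-1] / eigvals.sum()
    return loadings, share

rng = np.random.default_rng(0)
n = 5000
common = rng.normal(size=n)                       # hypothetical shared influence
# Assumed weights for: reasoning, memory span, speed, vocabulary (illustrative only).
weights = np.array([0.8, 0.6, 0.5, 0.7])
unique_sd = np.sqrt(1 - weights**2)               # keeps each test at unit variance
scores = common[:, None] * weights + rng.normal(size=(n, 4)) * unique_sd

loadings, share = first_component(scores)
print("loadings:", np.round(loadings, 2))
print("share of variance:", round(float(share), 2))
```

Calling `first_component` on a subset of the columns (for example `scores[:, :3]`) returns different loadings, which is the sense in which g is a property of the battery rather than of any single test.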

Now consider the individual generating the scores. Substantive intelligence - manifest in problem-solving and comprehension - accumulates traces over time: efficiency in knowledge acquisition and organization, inclinations toward complexity, and persistence in pattern detection. These manifest in durable outcomes. A general knowledge assessment at age 30 reflects the integration of countless learning episodes. In contrast, a reasoning test administered once captures a momentary performance under constrained conditions. One represents a long-term average; the other, a point estimate. When epistemic exposure is comparable - as in educated adult populations within modern societies - this averaged signal can correlate more strongly with the underlying capacity than a single reasoning trial.
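The contrast between a long-term average and a point estimate is the familiar aggregation effect, and a small simulation makes it concrete. The model here is an assumption chosen for simplicity: every learning episode or test sitting equals a latent capacity plus independent noise of equal variance, so the correlation between capacity and the mean of k episodes is 1 / sqrt(1 + noise_sd² / k).

```python
import numpy as np

def expected_r(k, noise_sd):
    """Correlation between capacity (unit variance) and the mean of k noisy episodes."""
    return 1.0 / np.sqrt(1.0 + noise_sd**2 / k)

rng = np.random.default_rng(1)
n_people, n_episodes, noise_sd = 2000, 200, 2.0   # illustrative values, not estimates
capacity = rng.normal(size=n_people)              # hypothetical latent capacity

# Each column is one episode: capacity plus independent noise.
episodes = capacity[:, None] + rng.normal(scale=noise_sd, size=(n_people, n_episodes))

single_trial = episodes[:, 0]                     # one sitting of a reasoning test
long_run_avg = episodes.mean(axis=1)              # accumulated trace over many episodes

r_single = np.corrcoef(capacity, single_trial)[0, 1]
r_average = np.corrcoef(capacity, long_run_avg)[0, 1]
print("single trial:", round(float(r_single), 2), "expected", round(float(expected_r(1, noise_sd)), 2))
print("long-run average:", round(float(r_average), 2), "expected", round(float(expected_r(n_episodes, noise_sd)), 2))
```

Real learning episodes are neither independent nor equally informative, so the size of the gap in this sketch should not be read as an empirical estimate.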

This does not equate knowledge with intelligence. Performance on knowledge tests is sensitive to cultural and opportunity factors; altering surface content while preserving structure can drastically reduce scores. The utility of knowledge as a proxy hinges on epistemic opportunity parity: similar access to education and information allows accumulated knowledge to reflect processing efficiency. Absent this parity - due to linguistic, educational, or cultural differences - the proxy falters. In such cases, reasoning tasks that demand online structure manipulation take precedence. This explains why general knowledge tests excel within homogeneous national samples but exhibit biases across age, nationality, and gender (Kirkegaard & Jensen, 2025).
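The dependence on parity can be illustrated with a toy model. Everything in it is an assumption for the sake of the sketch: knowledge is taken to be the sum of capacity, exposure, and noise, and exposure is drawn independently of capacity, which the niche-construction argument elsewhere in this piece would not grant. The only point is how the knowledge-capacity correlation moves as the spread of exposure grows.

```python
import numpy as np

def knowledge_capacity_corr(exposure_sd, n=20000, seed=2):
    """Correlation between capacity and a knowledge score under a toy additive model."""
    rng = np.random.default_rng(seed)
    capacity = rng.normal(size=n)                        # hypothetical latent capacity
    exposure = rng.normal(scale=exposure_sd, size=n)     # assumed independent of capacity
    knowledge = capacity + exposure + rng.normal(scale=0.3, size=n)
    return np.corrcoef(capacity, knowledge)[0, 1]

r_parity = knowledge_capacity_corr(exposure_sd=0.2)      # similar access to education
r_no_parity = knowledge_capacity_corr(exposure_sd=2.0)   # widely varying access
print("with parity:", round(float(r_parity), 2))
print("without parity:", round(float(r_no_parity), 2))
```

Under this model the correlation is 1 / sqrt(1 + exposure_sd² + 0.09), so it falls steadily as exposure differences widen, even though nothing about capacity itself has changed.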

A further mechanism underlies the clustering of cognitive measures: the heritability of general cognitive ability, which increases with age and is estimated at 0.6–0.8 in adults. Genetics contributes substantially, both directly via neural development and efficiency, and indirectly through niche construction. Individuals with higher capacity tend to select environments that amplify it—engaging in extensive reading, advanced education, cognitively demanding pursuits, and sustained effort on challenges. These gene-environment correlations compound over time, aligning diverse test performances and enhancing g’s predictive validity. g thus encapsulates shared causal dynamics from both the capacity and its amplifying factors. However, high heritability does not confer constitutive status; a speeded coding task may share genetic influences without engaging model construction.

Cognition ≠ Intelligence

This distinction, often overlooked in psychometrics, is critical. Tasks such as digit-symbol substitution, digit span, simple reaction time, inspection time, and basic visuospatial spans effectively index enabling processes like processing speed, attentional control, and buffer capacity. They load substantially on g and covary with outcomes, yet they do not constitute intelligence. A deterministic system with minimal memory can outperform humans on these tasks via lookup, storage, and thresholding, without any abstraction, integration, or transfer. A basic electronic circuit built around a photodiode can achieve sub-millisecond reaction times, far beyond human limits. A simple array in a computer program can handle digit spans or N-back tasks of arbitrary length by maintaining a queue, with no comprehension involved. Digit-symbol substitution reduces to a fixed lookup table that software executes almost instantaneously. Conflating shared variance with essential demands leads to errors, such as equating intelligence with working memory capacity.
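To make the point concrete, here is roughly how little machinery these component tasks need. The symbol key and the function names are invented for illustration and do not correspond to any published test; the sketch only shows that a lookup table, a stored list, and a fixed-length queue are sufficient.

```python
from collections import deque

# Hypothetical digit-to-symbol key; real tests use their own printed key.
SYMBOLS = dict(zip("123456789", "!@#$%^&*("))

def digit_symbol(digits):
    """Digit-symbol substitution as a plain table lookup."""
    return "".join(SYMBOLS[d] for d in digits)

def digit_span(digits, backward=False):
    """Digit span of any length: store the sequence, then replay it."""
    stored = list(digits)
    return stored[::-1] if backward else stored

def n_back_hits(stream, n):
    """N-back (n >= 1): flag items that match the one seen n steps earlier."""
    window = deque(maxlen=n)          # fixed-size queue of the last n items
    hits = []
    for item in stream:
        hits.append(len(window) == n and window[0] == item)
        window.append(item)           # oldest item drops out automatically
    return hits

print(digit_symbol("314159"))
print(digit_span([3, 1, 4, 1, 5], backward=True))
print(n_back_hits("ABAB", 2))
```

None of these functions builds or transfers a model of anything, yet each would score at ceiling on its task.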

Clarifying these boundaries resolves the apparent paradox. g is not substantive intelligence, but a battery incorporating reliable indicators (particularly knowledge) alongside reasoning demands can yield a more stable proxy for the capacity than an isolated reasoning measure. This holds under epistemic parity and battery breadth/reliability. In homogeneous, well-educated samples, knowledge’s temporal integration mitigates noise, and g benefits accordingly. In diverse contexts, knowledge proxies exposure, weakening the link to capacity and emphasizing reasoning’s direct requirements.

AI as an Example

The distinction sharpens with AI systems, which operate under asymmetric data regimes: pretrained on vast corpora, they acquire knowledge decoupled from generative processes. High knowledge performance stems from retrieval rather than reasoning. For AI, knowledge weakly signals intelligence; capacity emerges in transfer tasks requiring compositionality and out-of-distribution adaptation, where storage and speed alone fail.

One lingering question: amid causal mixtures, what qualifies as a measure of intelligence? I propose a proximity principle: a task measures intelligence to the extent that success requires the constitutive capacities—abstraction, relational integration, interference resolution, and model transfer. This is conceptual, not purely statistical. It positions knowledge as an indicator (a downstream product), dependent on exposure. Component tasks are enablers, surpassable by non-intelligent systems. Well-constructed reasoning tasks, resistant to content variation and memorization, qualify as measures, despite single-administration noise.

High heritability accounts for the covariation among measures, but it does not determine which tasks embody intelligence. Shared genetic influences can elevate scores on coding tasks, knowledge, or even peripheral traits like height or myopia, yielding modest correlations. g efficiently summarizes these effects for prediction but remains distinct from the target capacity.

An Analogy

Suppose we assess “true height” via stadiometer, but variability arises from posture, footwear, sleeping position or measurement error. Alternatively, measure multiple limbs - arm span, leg length, foot, neck, fingers - and extract the first factor. This composite, aggregating reliable sub-measures, may exhibit higher test-retest reliability than a single stadiometer reading, greater heritability (due to error reduction), and superior prediction for outcomes sensitive to proportions (e.g., certain athletics)—without being height per se.

Testable Predictions

Convergent validity: The limb factor correlates highly with stadiometer height (r ≥ .85) in standard adult samples.

Reliability gain: Intraclass correlation or Spearman’s ρ for the factor exceeds that of single-session height under variable protocols (difference ≥ .05).

Aggregation effect: The reliability edge diminishes under standardized stadiometer procedures (e.g., barefoot, posture-corrected, averaged trials), so the two reliabilities converge.

Heritability inflation: Twin- or SNP-based h² for the factor surpasses that for raw height due to attenuated error.

Predictive validity shift: For limb-leveraged sports (rowing, basketball), the factor outperforms raw height; for torso-dependent events (some gymnastics), the pattern attenuates or reverses.

Mediation by true stature: The factor’s prediction of outcomes like income is largely mediated by well-measured height, with residuals where proportions add value.

Parity sensitivity: In inconsistent measurement settings (e.g., field surveys), the factor excels; in controlled labs, the advantage vanishes.

Invariance tests: Across groups with differing proportions (sex, ancestry), factor loadings vary; enforcing equality degrades model fit.

Bias under specific conditions: In populations with torso variability (scoliosis), the factor overestimates relative to accurate height.

Incremental validity: In regressions for sport selection, the factor adds ΔR² > 0 beyond standardized height.

Robustness to noise: Error in one limb measure minimally impacts the factor score.

Cross-temporal stability: Over 6–12 months in adults, the factor shows greater stability than unprotocolized height; with protocols, stabilities align.
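The first three predictions in this list (convergent validity, reliability gain, and the aggregation effect) can be checked against a toy simulation before anyone collects data. All numbers below are assumptions picked for illustration: five limb measures that each load 0.8 on standardized stature, stable limb-specific proportions, small per-session error, and a stadiometer whose error depends on how strict the protocol is. The first principal component of the session-1 limbs stands in for the extracted factor.

```python
import numpy as np

rng = np.random.default_rng(3)
n, n_limbs = 5000, 5
stature = rng.normal(size=n)                              # hypothetical true stature (standardized)
proportions = rng.normal(scale=0.5, size=(n, n_limbs))    # stable limb-specific deviations

def limb_session():
    """One measurement session of all limbs, with fresh measurement error."""
    error = rng.normal(scale=0.3, size=(n, n_limbs))
    return 0.8 * stature[:, None] + proportions + error

def stadiometer_session(error_sd):
    """One stadiometer reading; error_sd reflects how loose the protocol is."""
    return stature + rng.normal(scale=error_sd, size=n)

def corr(a, b):
    return np.corrcoef(a, b)[0, 1]

limbs1, limbs2 = limb_session(), limb_session()
mu, sd = limbs1.mean(axis=0), limbs1.std(axis=0)

# First-component weights estimated on session 1, then applied to both sessions.
eigvals, eigvecs = np.linalg.eigh(np.corrcoef(limbs1, rowvar=False))
w = eigvecs[:, -1]
w = w if w.sum() > 0 else -w
factor1 = ((limbs1 - mu) / sd) @ w
factor2 = ((limbs2 - mu) / sd) @ w

factor_retest = corr(factor1, factor2)
loose_retest = corr(stadiometer_session(0.5), stadiometer_session(0.5))
strict_retest = corr(stadiometer_session(0.05), stadiometer_session(0.05))
convergent = corr(factor1, stature)

print("factor test-retest:", round(float(factor_retest), 3))
print("stadiometer, variable protocol:", round(float(loose_retest), 3))
print("stadiometer, strict protocol:", round(float(strict_retest), 3))
print("factor vs true stature:", round(float(convergent), 3))
```

With these assumed parameters the composite is more stable than a loosely measured height but not more stable than a carefully measured one. The pattern depends entirely on the chosen error sizes, so it illustrates the logic of the predictions rather than confirming them.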

Finally, a Testable Prediction

Controlling for g-loading and reliability, proximal tasks (structure-dependent, content-invariant) will demonstrate greater parameter stability and measurement invariance across cultures and systems (humans vs. AI) than distal tasks (knowledge or components).

Conclusion

Mapping back to cognition: In select contexts, g derived from multiple reliable indicators can serve as a more stable proxy for substantive intelligence than a single direct measure - valuable for prediction - while remaining distinct from the construct.

Practically, this refines score interpretation. g-loadings reflect shared variance, not inherent essence. Incorporating knowledge enhances stability in parity conditions but embeds exposure assumptions; in heterogeneous settings, the g-capacity link attenuates, prioritizing reasoning demands. Distal correlates (e.g., height, myopia, lifestyle) share etiology with performance but lie outside the construct and battery.

Thank you,

Davide

References

Kirkegaard, E. O. W., & Jensen, S. (2025). Psychometric Analysis of the Multifactor General Knowledge Test. OpenPsych. https://doi.org/10.26775/op.2025.07.28

Spearman, C. (1904). “General Intelligence,” Objectively Determined and Measured. The American Journal of Psychology, 15(2), 201–292. https://doi.org/10.2307/1412107

I think the question reflects a lack of public trust in the statistical judgements factor analysts make.

Somebody asked me for book recommendations and it got me thinking about deconstructing the question. "Books" are just tools for ruminating on a subject, what's cardinal is judgement.

Most books generally aren't very respectful of a reader's time, and aren't honestly representative of how thought actually works. Most people read (and write) "books" merely to grant a body of judgements some codified stamp of finality in order to promote a pretension of "investment" that legitimates those judgements, hence authors like to have a "premise -> conclusion" structure instead of the "conclusion <- premise" structure that's naturally legible to a virgin perspective.

Likewise, the fact that this is even a question is a reflection that factor analytic methods have been made too complex and out of public reach for people to trust them. There should be resources to make it easy for people to play with factor analysis themselves without having to know where to find the required tacit background knowledge.

To me, the most relevant fact about mental test data is that when you construct first-order factors, the relationships between lower-order factors require nothing more than a single higher-order factor to perfectly reconstruct the relationships between factors.

I think it's amazingly clarifying to take a step back and recognize that "measurement" is just structured (and relativized) judgement.